So you’ve built your model, and now you want to deploy it for testing or to show it to someone like your mom or grandpa, who may not be comfortable using a collaborative notebook? No worries at all; you can still impress them with your mighty machine-learning skills 😂.

We going to use the model we built here. Before it can be deployed we need to export it, so we need to add several files to the notebook:

learn.export()This will create ‘export.pkl’ file with our model. This is actually a zip archive with model architecture and the trained parameters. You can unzip it and check what’s inside.

Now we will have to jump through some hoops. First of all, we need to upload our model to GitHub. The problem is — GitHub doesn’t allow files larger than 25 megabytes. So we will have to split our model file into several chunks:

with open('export.pkl', 'rb') as infile:

chunk_size = 1024 * 1024 * 10

index = 0

while True:

chunk = infile.read(chunk_size)

if not chunk:

break

with open(f'model_{index}.bin', 'wb') as outfile:

outfile.write(chunk)

index += 1Now let’s create a new notebook that will use the model. You need to upload this notebook file along with model files created in the previous step to GitHub. Here is the complete notebook.

Firstly, we need to combine model files back into a single file called ‘model.pkl’:

dest = 'model.pkl'

chunk_prefix = 'model_'

chunk_size = 1024 * 1024 * 10

index = 0

with open(dest, 'wb') as outfile:

while True:

chunk_filename = f'{chunk_prefix}{index}.bin'

try:

with open(chunk_filename, 'rb') as infile:

chunk_data = infile.read()

if not chunk_data:

# No more chunks to read, break out of the loop

break

outfile.write(chunk_data)

index += 1

except FileNotFoundError:

break#load model

dest = 'model.pkl'

learn = load_learner(dest)And create UI widgets to use our model, same as here, using IPython widgets:

out_pl = widgets.Output()

out_pl.clear_output()

btn_upload = widgets.FileUpload()

def on_upload_change(change):

if len(btn_upload.data) > 0 :

img = PILImage.create(btn_upload.data[-1])

with out_pl: display(img.to_thumb(128,128))

btn_upload.observe(on_upload_change, names='_counter')

def on_click_classify(change):

img = PILImage.create(btn_upload.data[-1])

print(learn.predict(img))

btn_run = widgets.Button(description='predict')

btn_run.on_click(on_click_classify)

VBox([widgets.Label('Test your image!'),

btn_upload, btn_run, out_pl])We are going to use Viola to render our app. Voila runs Jupyter notebooks just like the Jupyter notebook server you are using, but it removes all of the cell inputs, and only shows output, along with your markdown cells. Let’s install it:

!pip install voila

!jupyter serverextension enable --sys-prefix voilaYou also need to add one more file to your repository: requirements.txt. We are going to use Binder, and this file is needed to run the model. It should have something like:

fastai

voila

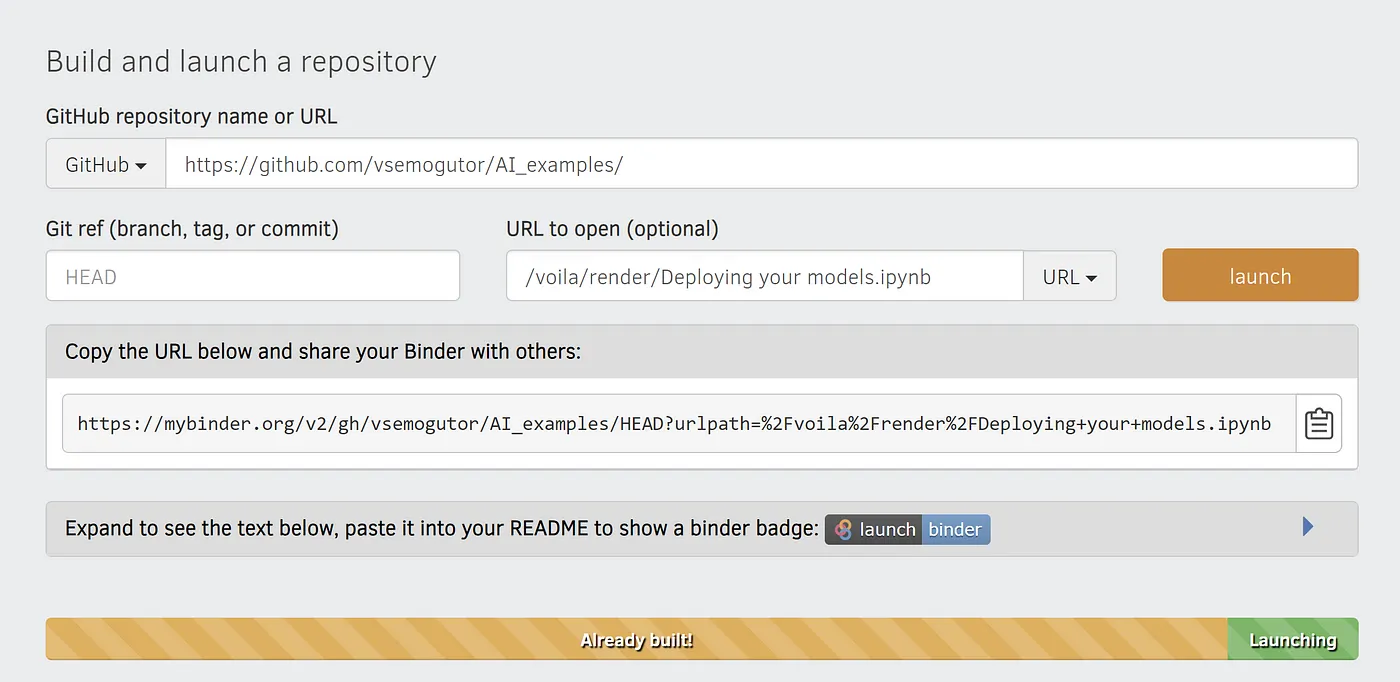

ipywidgetsAfter that, you need to open Binder, point it to your GitHub like that, and click Launch. It can take several minutes for Binder to create and launch a container with your model:

Binder will give you a URL that you can use to launch your app and share with your mom! The web page will look like this:

Congratulations, you have built and deployed a model!

The complete notebook for this article is here.