Not too long ago, the whole world eagerly awaited new products from Apple. Today, its attention is focused on another event: OpenAI DevDay, where the latest ChatGPT developments were showcased. Despite the modest, developer-to-developer style of the presentation, its impact on the future of technology is likely to be immense.

Social networks were rife with speculation about API releases for new models and a possible price cut for GPT-4, topics that Sam Altman, CEO of OpenAI, had already touched on during the summer. The anticipation was justified: the announcements far exceeded expectations.

So, what has been demonstrated, and what does it mean for all of us?

New GPT-4 Turbo

The first and most important announcement is GPT-4 Turbo with a 128K-token context window. Until recently, GPT-4 was available in two variants, with 8K and 32K context windows. Anthropic’s Claude 2 already offered a 100K-token window in the summer, yet despite the wider window it lags behind its OpenAI competitor in quality.

Why does this parameter matter? The more tokens a model can hold in its context, the longer the documents and conversations it can work with at once. A 128K-token window is impressive: according to OpenAI, it fits the equivalent of more than 300 pages of text in a single prompt, although a novel the size of War and Peace would still be several times too long. For application developers, this enables more complex scenarios, both in the chat and in applications built on the OpenAI API.
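To make the window sizes concrete, here is a minimal Python sketch that checks whether a text of a given length fits into each context window. It assumes the common rule of thumb of about 0.75 English words per token (a real application would count tokens with a tokenizer such as tiktoken); the `reserve_for_output` allowance for the reply, the 300 words per page, and the approximate word count for War and Peace are illustrative assumptions.

```python
# Context window sizes in tokens (model IDs as used in November 2023).
CONTEXT_WINDOWS = {
    "gpt-4": 8_192,
    "gpt-4-32k": 32_768,
    "gpt-4-1106-preview": 128_000,  # GPT-4 Turbo
}


def estimate_tokens(word_count: int) -> int:
    """Rough estimate assuming ~0.75 English words per token (an assumption)."""
    # ceil(word_count * 4 / 3) in integer arithmetic
    return (4 * word_count + 2) // 3


def fits_in_context(word_count: int, model: str, reserve_for_output: int = 4_096) -> bool:
    """True if the text plus a reserved budget for the reply fits the model's window."""
    return estimate_tokens(word_count) + reserve_for_output <= CONTEXT_WINDOWS[model]


if __name__ == "__main__":
    # ~300 pages at ~300 words per page vs. War and Peace (~560,000 words, approximate)
    for label, words in [("300-page book", 90_000), ("War and Peace", 560_000)]:
        for model in CONTEXT_WINDOWS:
            print(f"{label:>14} | {model:<18} | fits: {fits_in_context(words, model)}")
```

Under these assumptions a 300-page book (about 120,000 tokens) fits into GPT-4 Turbo’s window but not into the 32K model, while War and Peace would need roughly six times the 128K window.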

Another milestone: the model’s knowledge has been updated through April 2023, so it now knows about technological advances and world events up to that date.

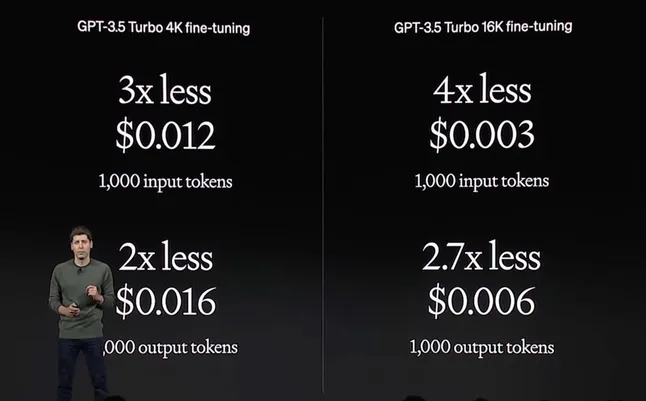

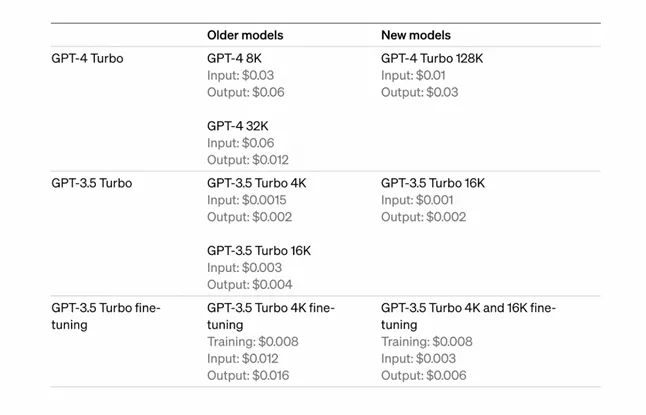

It seemed things could not get any better, but then Sam Altman said on stage, ‘You asked us to make GPT-4 even more affordable, and we’ve accomplished it.’ Through the API, GPT-4 Turbo is three times cheaper than GPT-4 for input tokens and two times cheaper for output tokens, about 2.5 times cheaper on average. This means more startups will be able to integrate GPT-4 into their products. Previously, GPT-4 was often too expensive to be practical: in one of our products, for instance, the unit economics did not work with GPT-4, so we opted for GPT-3.5 despite its lower quality.
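The “2.5 times on average” figure depends on how many input versus output tokens a workload uses. The sketch below compares per-request costs using the per-1K-token prices announced at DevDay ($0.03/$0.06 for GPT-4 8K, $0.01/$0.03 for GPT-4 Turbo); prices change over time, so treat the table as an assumption to check against OpenAI’s pricing page.

```python
from decimal import Decimal

# USD per 1,000 tokens as (input, output), as announced in November 2023.
PRICES_PER_1K = {
    "gpt-4": (Decimal("0.03"), Decimal("0.06")),
    "gpt-4-1106-preview": (Decimal("0.01"), Decimal("0.03")),  # GPT-4 Turbo
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> Decimal:
    """Cost in USD of one request with the given token counts."""
    input_price, output_price = PRICES_PER_1K[model]
    return (input_price * input_tokens + output_price * output_tokens) / 1000


def savings_factor(input_tokens: int, output_tokens: int) -> Decimal:
    """How many times cheaper GPT-4 Turbo is than GPT-4 for this token mix."""
    return request_cost("gpt-4", input_tokens, output_tokens) / request_cost(
        "gpt-4-1106-preview", input_tokens, output_tokens
    )


if __name__ == "__main__":
    for inp, out in [(3_000, 1_000), (1_000, 1_000), (10_000, 500)]:
        print(f"{inp:>6} in / {out:>5} out -> {savings_factor(inp, out):.2f}x cheaper")
```

A prompt-heavy mix of 3,000 input and 1,000 output tokens gives exactly 2.5x; an even 1:1 mix gives 2.25x, and very long prompts approach the 3x input-price ratio.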

Along with this, OpenAI has improved GPT-3.5, which will also be cheaper to call through the API. Fine-tuning GPT-3.5 has also become more affordable.

Cost of fine-tuning GPT-3.5

Per-minute rate limits have also been raised: OpenAI doubled the tokens-per-minute limit for all paying GPT-4 customers. Previously, to handle high load on the OpenAI API, users had to resort to workarounds such as spreading requests across several accounts. Now, you can use significantly more tokens per minute.
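Even with higher limits, a busy application still has to stay under its tokens-per-minute (TPM) quota. A common client-side approach, not something OpenAI prescribes, is a token bucket. Below is a minimal sketch, assuming you can estimate each request’s token count up front; the quota in the usage example is hypothetical, since real limits depend on your account tier.

```python
import time
from typing import Callable


class TokenBucket:
    """Client-side limiter for a tokens-per-minute (TPM) quota.

    Holds up to `tokens_per_minute` tokens and refills continuously at
    tokens_per_minute / 60 tokens per second.
    """

    def __init__(self, tokens_per_minute: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = float(tokens_per_minute)
        self.rate = tokens_per_minute / 60.0  # tokens refilled per second
        self.available = self.capacity
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self.last = clock()

    def _refill(self) -> None:
        now = self.clock()
        self.available = min(self.capacity, self.available + (now - self.last) * self.rate)
        self.last = now

    def try_acquire(self, tokens: int) -> bool:
        """Reserve `tokens` if enough quota is left; never succeeds if tokens > capacity."""
        self._refill()
        if tokens <= self.available:
            self.available -= tokens
            return True
        return False

    def wait_time(self, tokens: int) -> float:
        """Seconds until `tokens` could be reserved, assuming no other callers."""
        self._refill()
        return max(0.0, (tokens - self.available) / self.rate)


if __name__ == "__main__":
    bucket = TokenBucket(tokens_per_minute=300_000)  # hypothetical quota
    estimated = 12_000  # estimated prompt + completion tokens for the next request
    if not bucket.try_acquire(estimated):
        time.sleep(bucket.wait_time(estimated))
        bucket.try_acquire(estimated)
    # ... send the request here ...
```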

Cost of API calls

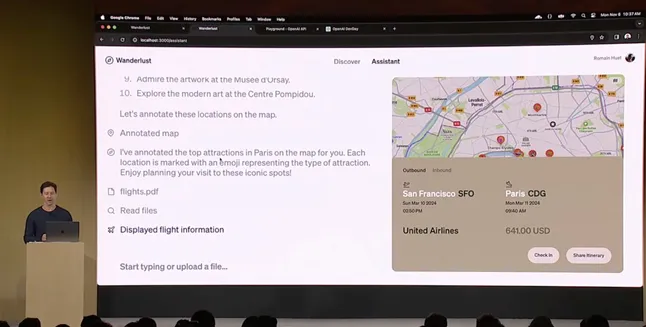

External function calls and JSON mode

Function calling, which lets the model invoke external functions and APIs, has been improved: the model can now request several function calls in a single response. During the presentation, a live demo showed GPT planning a trip to Paris: it marked places to visit on a map and even handled the details of an Airbnb booking. The integration has become even more streamlined. Most significantly, there is now a JSON mode: a model in this mode is guaranteed to return syntactically valid JSON. Previously, this required careful prompting; now it is a built-in feature that should reduce parsing errors, although it does not guarantee that the output follows a particular schema or that its contents are free of hallucinations.
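On the application side, function calling means the model returns a list of `tool_calls`, each with a function name and JSON-encoded arguments; your code runs those functions and sends the results back as `tool` messages. The sketch below shows that dispatch loop on plain dicts shaped like the Chat Completions response. `find_places` and its data are hypothetical stand-ins for the map API in the Paris demo, and no network call is made.

```python
import json
from typing import Any, Callable

# Hypothetical local data standing in for a real places/maps API.
_PLACES = {"Paris": {"museum": ["Louvre", "Musée d'Orsay"], "park": ["Jardin du Luxembourg"]}}


def find_places(city: str, category: str = "museum") -> list[str]:
    """Hypothetical tool: list places of a given category in a city."""
    return _PLACES.get(city, {}).get(category, [])


# JSON Schema description sent to the model in the `tools` request parameter.
TOOLS = [{
    "type": "function",
    "function": {
        "name": "find_places",
        "description": "List places to visit in a city",
        "parameters": {
            "type": "object",
            "properties": {
                "city": {"type": "string"},
                "category": {"type": "string", "enum": ["museum", "park"]},
            },
            "required": ["city"],
        },
    },
}]

REGISTRY: dict[str, Callable[..., Any]] = {"find_places": find_places}


def run_tool_calls(tool_calls: list[dict]) -> list[dict]:
    """Execute each requested call and build the `tool` messages to send back."""
    replies = []
    for call in tool_calls:
        name = call["function"]["name"]
        func = REGISTRY.get(name)
        if func is None:
            result: Any = {"error": f"unknown function {name}"}
        else:
            # Arguments are a JSON string generated by the model; validate them in real code.
            result = func(**json.loads(call["function"]["arguments"]))
        replies.append({"role": "tool", "tool_call_id": call["id"], "content": json.dumps(result)})
    return replies


if __name__ == "__main__":
    # One response may contain several calls at once (parallel function calling).
    calls = [
        {"id": "call_1", "type": "function",
         "function": {"name": "find_places", "arguments": '{"city": "Paris"}'}},
        {"id": "call_2", "type": "function",
         "function": {"name": "find_places", "arguments": '{"city": "Paris", "category": "park"}'}},
    ]
    for message in run_tool_calls(calls):
        print(message)
```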

Demonstration of a function call

These features are available in both GPT-4 Turbo and GPT-3.5 Turbo. This matters especially for GPT-3.5, which frequently ignored instructions such as ‘always answer in XML.’ JSON mode makes it easier to work with, and with the reduced price, GPT-3.5 remains as relevant as ever.
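Here is a minimal sketch of using JSON mode defensively. It builds request parameters with `response_format={"type": "json_object"}`, which can be passed as `client.chat.completions.create(**request)` in the OpenAI Python SDK, and then validates the reply, because JSON mode guarantees valid JSON syntax but not the keys your code expects. The prompt check reflects OpenAI’s documented requirement that the conversation itself ask for JSON; the helper names and required keys are hypothetical.

```python
import json

JSON_MODE = {"type": "json_object"}  # value of the `response_format` request parameter


def build_json_mode_request(model: str, system_prompt: str, user_prompt: str) -> dict:
    """Build Chat Completions parameters with JSON mode enabled."""
    # The API expects the messages themselves to ask for JSON when JSON mode is on.
    if "json" not in (system_prompt + user_prompt).lower():
        raise ValueError("mention JSON in the prompt when using JSON mode")
    return {
        "model": model,
        "response_format": JSON_MODE,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
    }


def parse_json_reply(content: str, finish_reason: str, required_keys: set[str]) -> dict:
    """Parse a JSON-mode reply and check the keys the application relies on."""
    # A reply cut off by the token limit may be incomplete JSON.
    if finish_reason == "length":
        raise ValueError("reply was truncated; raise max_tokens or shorten the request")
    data = json.loads(content)  # valid syntax is guaranteed, your schema is not
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = required_keys - set(data)
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    return data


if __name__ == "__main__":
    request = build_json_mode_request(
        "gpt-3.5-turbo-1106",
        "Reply in JSON with the keys 'city' and 'days'.",
        "Plan a three-day trip to Paris.",
    )
    print(request["response_format"])
    print(parse_json_reply('{"city": "Paris", "days": 3}', "stop", {"city", "days"}))
```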

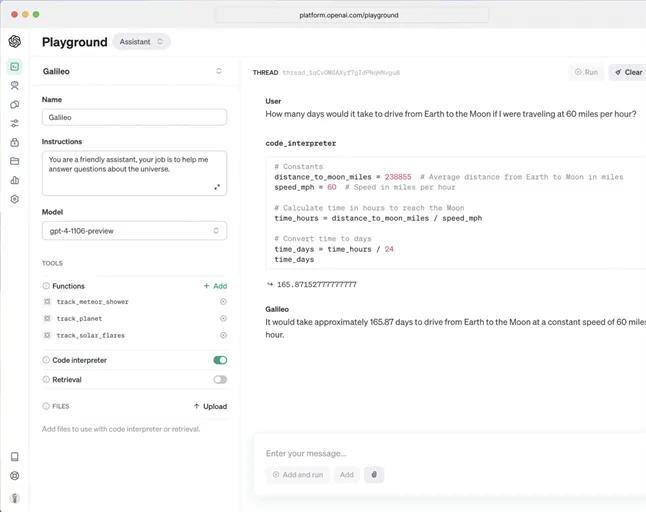

Assistants API and Code Interpreter

The Assistants API expands what developers can build on the platform. Its main innovation is “Threads”: instead of managing the context window yourself and passing the conversation history with every API call, you simply add new messages to an existing thread, and OpenAI takes care of fitting the conversation into the model’s context window.
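To see what Threads take off developers’ hands, here is a minimal sketch of the manual approach: before every call, drop the oldest messages until the conversation fits a token budget. It assumes system messages come first, ignores per-message overhead, and uses a crude ~4-characters-per-token estimate instead of a real tokenizer; `approx_tokens` and `trim_history` are hypothetical helpers, not part of any SDK.

```python
from typing import Callable


def approx_tokens(text: str) -> int:
    """Crude estimate: about 4 characters per token (an assumption, not a tokenizer)."""
    return max(1, (len(text) + 3) // 4)


def trim_history(messages: list[dict], budget: int,
                 count: Callable[[str], int] = approx_tokens) -> list[dict]:
    """Keep system messages plus the most recent messages that fit in `budget` tokens."""
    system = [m for m in messages if m["role"] == "system"]
    dialog = [m for m in messages if m["role"] != "system"]
    used = sum(count(m["content"]) for m in system)
    kept: list[dict] = []
    # Walk backwards so the newest turns are kept first.
    for message in reversed(dialog):
        cost = count(message["content"])
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    return system + kept[::-1]  # restore chronological order


if __name__ == "__main__":
    history = [
        {"role": "system", "content": "You are a travel assistant."},
        {"role": "user", "content": "Plan a trip to Paris. " * 50},
        {"role": "assistant", "content": "Here is a plan..."},
        {"role": "user", "content": "Add a museum on day two."},
    ]
    for m in trim_history(history, budget=100):
        print(m["role"], approx_tokens(m["content"]))
```

With the Assistants API, this bookkeeping moves to OpenAI’s side: the application only appends the newest message to the thread.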

Code Interpreter and Retrieval have also been added to the API. Retrieval lets you upload documents directly, so features such as ‘chat with PDF’ can now be built on the API itself. Code Interpreter writes and runs Python code in a sandboxed environment to perform calculations or parse files. Developers have not yet fully explored the capabilities of code models, and it will likely take some time before we can upload a startup’s codebase, or a legacy project, and ask the model to fix its bugs.
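OpenAI has not published how Retrieval works internally, so the sketch below only illustrates the general idea behind ‘chat with PDF’ features: split a document into overlapping chunks and pick the chunk most relevant to the question before passing it to the model. Production systems usually rank chunks by embedding similarity; simple word overlap stands in for that here, and the chunk sizes are arbitrary assumptions.

```python
def chunk_words(text: str, size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into chunks of `size` words, each sharing `overlap` words with the previous one."""
    if not 0 <= overlap < size:
        raise ValueError("require 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):  # this chunk already reaches the end of the text
            break
    return chunks


def best_chunk(chunks: list[str], question: str) -> str:
    """Return the chunk sharing the most lower-cased words with the question (raises on empty input)."""
    query = set(question.lower().split())
    return max(chunks, key=lambda chunk: len(query & set(chunk.lower().split())))


if __name__ == "__main__":
    document = "the invoice total is 420 EUR and the shipping address is in Paris " * 3
    chunks = chunk_words(document, size=8, overlap=2)
    print(best_chunk(chunks, "what is the invoice total"))
```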

By the way, you can try all of this in the new Playground, which allows you to use the API in no-code mode.

Assistants API Playground

A model that sees, speaks, and paints pictures

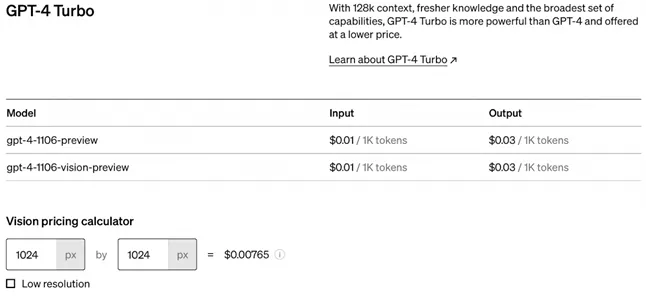

The API now also exposes the multimodal capabilities previously available in ChatGPT: GPT-4 Turbo can recognize images with Vision, and new images can be generated with DALL-E 3. This opens up even more avenues for integration through the API. Images are billed separately: analyzing a 1024×1024 image with GPT-4 Turbo with Vision costs about $0.00765.
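The image price follows from a token count. According to OpenAI’s vision documentation at launch, a high-detail image is scaled to fit within 2048×2048, then scaled so its shortest side is 768 px, and billed 170 tokens per 512-px tile plus 85 base tokens; low-detail images cost a flat 85 tokens. The sketch below reproduces that arithmetic. The rounding and the never-upscale behavior are our assumptions, and the $0.01 per 1K input tokens is GPT-4 Turbo’s launch price.

```python
import math
from decimal import Decimal

INPUT_PRICE_PER_1K = Decimal("0.01")  # GPT-4 Turbo input price, USD per 1K tokens (Nov 2023)


def vision_tokens(width: int, height: int, detail: str = "high") -> int:
    """Approximate token count for one image, following OpenAI's published tiling rule."""
    if detail == "low":
        return 85  # flat cost for low-detail images
    # 1. Scale down to fit within a 2048 x 2048 square, keeping the aspect ratio.
    scale = min(1.0, 2048 / max(width, height))
    width, height = round(width * scale), round(height * scale)
    # 2. Scale down so the shortest side is 768 px (assumed: never upscale).
    scale = min(1.0, 768 / min(width, height))
    width, height = round(width * scale), round(height * scale)
    # 3. Count 512 px tiles: 170 tokens per tile plus 85 base tokens.
    tiles = math.ceil(width / 512) * math.ceil(height / 512)
    return 85 + 170 * tiles


def vision_cost_usd(tokens: int) -> Decimal:
    """Input cost of the image tokens at the assumed GPT-4 Turbo price."""
    return INPUT_PRICE_PER_1K * tokens / 1000


if __name__ == "__main__":
    for w, h in [(1024, 1024), (2048, 4096), (512, 512)]:
        tokens = vision_tokens(w, h)
        print(f"{w}x{h}: {tokens} tokens, ${vision_cost_usd(tokens)}")
```

For a 1024×1024 image this gives 4 tiles, 765 tokens, or $0.00765, which matches the price quoted above.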

Cost of using new models

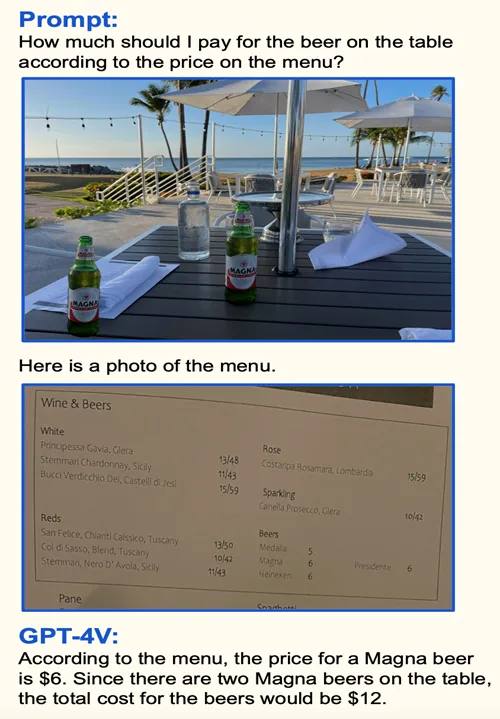

GPT-4 with Vision is the model that can work out how much each person should pay for dinner from a photo of the menu and the food on the table.
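Through the API, such a photo is sent as part of the message content, next to the text question. The sketch below builds a Chat Completions request with the image inlined as a base64 data URL. `gpt-4-vision-preview` was the model ID at launch; the helper names, the `max_tokens` value, and the dinner example are illustrative assumptions, and no request is actually sent.

```python
import base64


def image_question(question: str, image_bytes: bytes, mime_type: str = "image/jpeg",
                   detail: str = "auto") -> dict:
    """Build a user message pairing a text question with an inline image."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {
                "type": "image_url",
                # A public https URL also works; here the image is inlined as a data URL.
                "image_url": {"url": f"data:{mime_type};base64,{encoded}", "detail": detail},
            },
        ],
    }


def build_vision_request(message: dict, max_tokens: int = 300) -> dict:
    """Request parameters for the launch-era vision model, with an explicit reply limit."""
    return {"model": "gpt-4-vision-preview", "messages": [message], "max_tokens": max_tokens}


if __name__ == "__main__":
    # Placeholder bytes; in practice read a photo, e.g. open("dinner.jpg", "rb").read()
    message = image_question("How much should each of us pay?", b"\xff\xd8\xff placeholder")
    request = build_vision_request(message)
    print(request["messages"][0]["content"][1]["image_url"]["url"][:40])
```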

GPT-4 Vision operation example

The Text-to-Speech API, which synthesizes human-like voices, is now available as a standalone feature. Users of the ChatGPT app have already appreciated the “OpenAI talker”; now its capabilities are accessible through the API, enabling applications that hold voice conversations with people. However, latency remains a significant challenge: the noticeable delay between the user’s question and the model’s response makes seamless dialogue difficult. This delay has been a major obstacle for voice startups such as air.ai, whose future now looks uncertain.
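One common way to reduce perceived latency in voice apps (a general technique, not something OpenAI announced) is to stream the model’s text and send each complete sentence to speech synthesis as soon as it is ready, instead of waiting for the whole answer. The sketch below shows only the sentence-splitting step over a simulated stream of text fragments; each yielded sentence could then go to a TTS call such as `client.audio.speech.create(model="tts-1", voice="alloy", input=sentence)` in the OpenAI Python SDK. The splitting rule is deliberately naive and would break on abbreviations like “Mr.”.

```python
import re
from typing import Iterable, Iterator

# End of sentence: '.', '!' or '?' followed by whitespace (naive on purpose).
_SENTENCE_END = re.compile(r"[.!?]\s")


def sentences_from_stream(fragments: Iterable[str]) -> Iterator[str]:
    """Yield complete sentences as soon as they appear in a stream of text fragments."""
    buffer = ""
    for fragment in fragments:
        buffer += fragment
        while (match := _SENTENCE_END.search(buffer)) is not None:
            sentence, buffer = buffer[: match.end()].strip(), buffer[match.end():]
            if sentence:
                yield sentence  # ready to hand off to speech synthesis
    if buffer.strip():  # flush whatever remains when the stream ends
        yield buffer.strip()


if __name__ == "__main__":
    simulated_stream = ["Hel", "lo there. How ", "are you? Fi", "ne"]
    for sentence in sentences_from_stream(simulated_stream):
        print(sentence)
```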

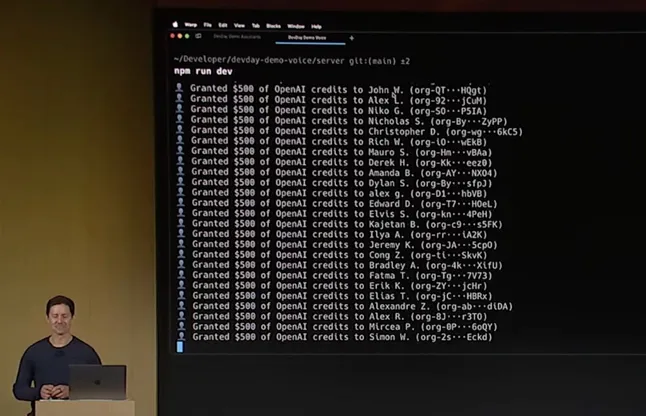

During the presentation, we saw these technologies combined in a conference assistant. The presenter made a bold move, asking the assistant to give each attendee $500 in OpenAI API credits. The assistant converted the voice command into text, called the necessary functions, gathered the attendee data, and credited each attendee’s OpenAI organization ID. With so many new API features to try, these credits are sure to be put to good use.

Distributing credits to participants

New release of Whisper v3 is now on GitHub

OpenAI’s Whisper, one of the leading speech-recognition models, is available both as open source and via the API. It is particularly impressive that the technology keeps evolving while remaining open source, which makes it possible to integrate it even into corporate products. Recently, a team reported an optimized version of Whisper that runs six times faster with minimal loss of accuracy.

ChatGPT’s functionality update, GPTs builder, marketplace and new interface

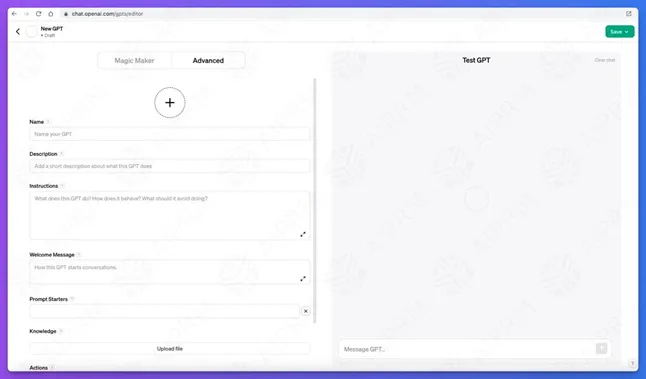

We were also introduced to a builder for creating custom assistants, similar to the skills for Yandex’s Alice voice assistant but built on ChatGPT, simple to set up and requiring no coding. These custom assistants are called GPTs.

GPTs Builder

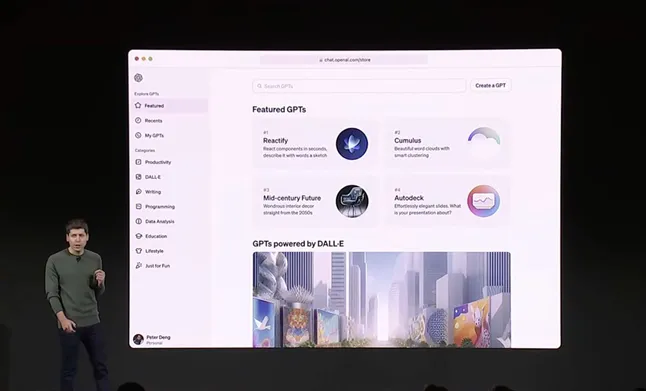

OpenAI plans to launch a marketplace for GPTs later this year, comparable to Apple’s App Store. While voice-assistant skills, such as those for Alexa, are often perceived by users as entertainment, the market potential for GPTs appears to be much broader. In just a year, ChatGPT has become an everyday tool for millions of users, which is likely to encourage developers to build a wide variety of assistants. Hopefully, the monetization terms will be more favorable to developers than Apple’s.

GPTs Marketplace

In addition, prompt engineering may become less necessary, or even unnecessary, for everyday interaction with ChatGPT. The community will quickly provide a large selection of preconfigured GPTs for developers, architects, creatives, and other specialists, where requests can be written in plain language without complex prompts.

GPTs Examples

The interface has also been updated. Previously, the ChatGPT interface hadn’t changed much since its initial release and might have seemed outdated. However, it now appears in a more modern form.

New ChatGPT interface

Copyright protection for AI products

I would like to highlight OpenAI’s Copyright Shield, an initiative to support ChatGPT Enterprise and API customers who face legal claims over generated content. The company commits to defending customers in such proceedings and covering the costs incurred, which underlines its customer-focused approach. It also shows the company’s determination to get as many people as possible building GPT-based products.

Microsoft recently launched a similar initiative, the Copilot Copyright Commitment, which lets customers use Copilot services and their outputs without worrying about copyright claims. If such claims arise, Microsoft assumes liability, providing legal defense and covering legal fees and any damages, provided that customers use the built-in content filters and restrictions.

Summary

Yesterday, the world saw a company of only a few hundred people release a dozen innovations. GPT assistants, new interfaces for working with prompts, and improved speech synthesis devalue many projects, and perhaps even companies, that have emerged in recent years. AI lawyers or robot salespeople built on simpler models will have a hard time competing with a small plug-in assistant backed by a strong foundation model like GPT-4 Turbo. Teams building their own models and striving for parity with GPT-3.5 will find it even harder to catch up with the leader. GPT-4 Turbo with Vision, available through the API, opens up new opportunities for developers to build applications that analyze text, images, and diagrams. And we will face new vulnerabilities, data leakage scandals, and security threats such as prompt injection and DAN-style jailbreaks.

Sam Altman, CEO of OpenAI, has repeatedly said in interviews that the company’s mission is to create AGI (artificial general intelligence): a level of AI at which machines can solve problems as well as humans, whether in logic, learning, or language understanding. With these announcements, we have moved another step closer to that goal.

The future belongs to AI, which is steadily entering every aspect of our daily lives. As ChatGPT approaches its first anniversary, we are once again convinced that its spread is inevitable. AI, and generative models in particular, are evolving at incredible speed, outpacing many earlier technological advances.